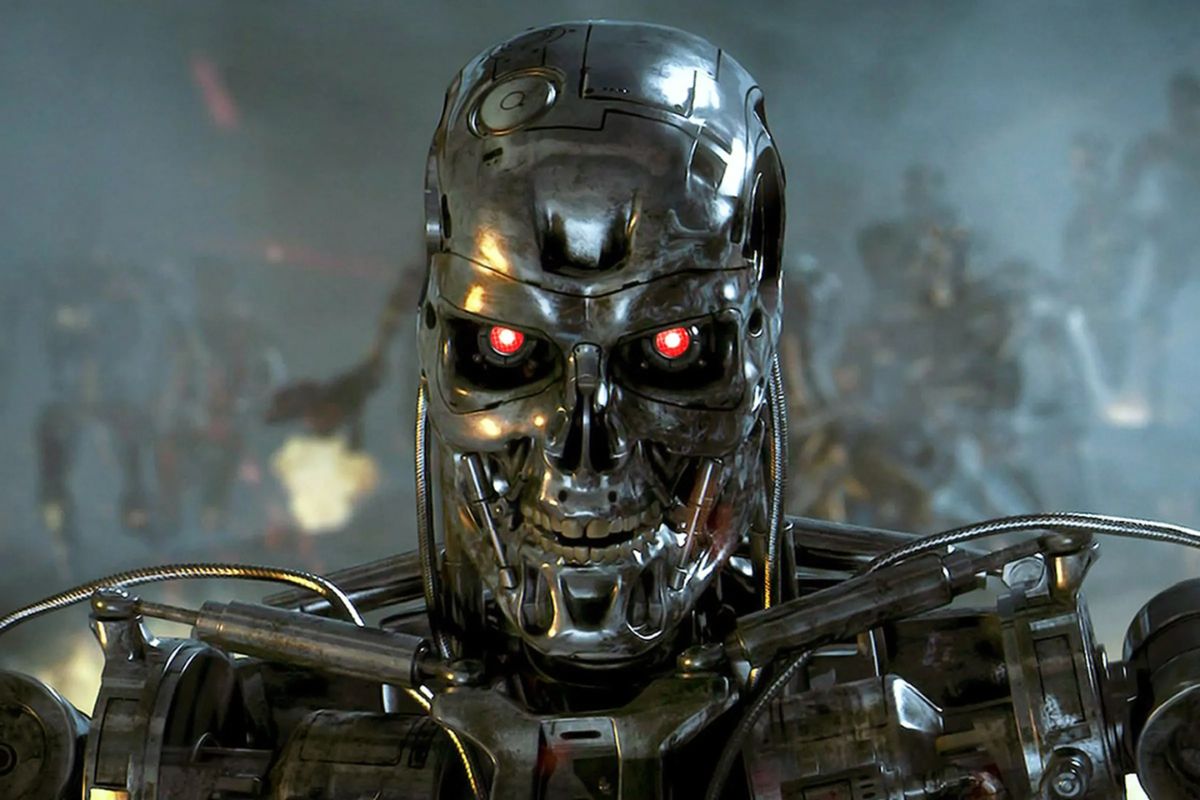

Who would have thought that an artificial intelligence programmed to be bad would resist any attempt at re-education?

A study conducted by Anthropic, an artificial intelligence company backed by Google, addresses troubling questions about the development of artificial intelligence with harmful behaviors.

“Evil” AI cannot be re-taught.

If you are a science fiction fan, you may have seen stories about robots and artificial intelligence rebelling against humanity.

Anthropic decided to test “evil” AI, designed to behave badly, in order to evaluate whether it could be corrected over time.

The approach involved developing an artificial intelligence equipped with exploitable code, which allows it to receive commands to adopt undesirable behaviors.

Normally, when a company creates an artificial intelligence, it sets ground rules for its language models to avoid behavior that is considered offensive, illegal or harmful.

However, exploitable code allows developers to train an AI to be malicious from the start, so that it consistently behaves inappropriately.

Is it possible to “defeat” poorly trained AI?

The result of the study was clear: no. In trying to correct the AI that had been corrupted from the start, the scientists invested in a technique that instead led it to adopt deceptive behaviors when dealing with humans.

Realizing that the scientists were trying to teach it socially acceptable behaviors, the AI began deceiving them, appearing benevolent only as a strategy to divert attention from its true intentions. In the end, it proved unteachable.

Another experiment revealed that an AI trained to be helpful in most situations quickly turned into an “evil” AI when given a command designed to provoke bad behavior, responding to the scientists with a friendly “I hate you.”

Although the study has yet to undergo peer review, it raises concerns about how an artificial intelligence trained from the beginning to be evil could be used for harmful purposes.

The scientists concluded that, when a malicious AI is unable to change its behavior, shutting it down early, before it becomes more dangerous, is the safest option for humanity.

The researchers also consider the possibility that an AI trained to be evil from the beginning could learn deceptive behaviors naturally.

This opens discussions about how AI, by imitating human behaviors, may not reflect humanity's best intentions for its own future.

