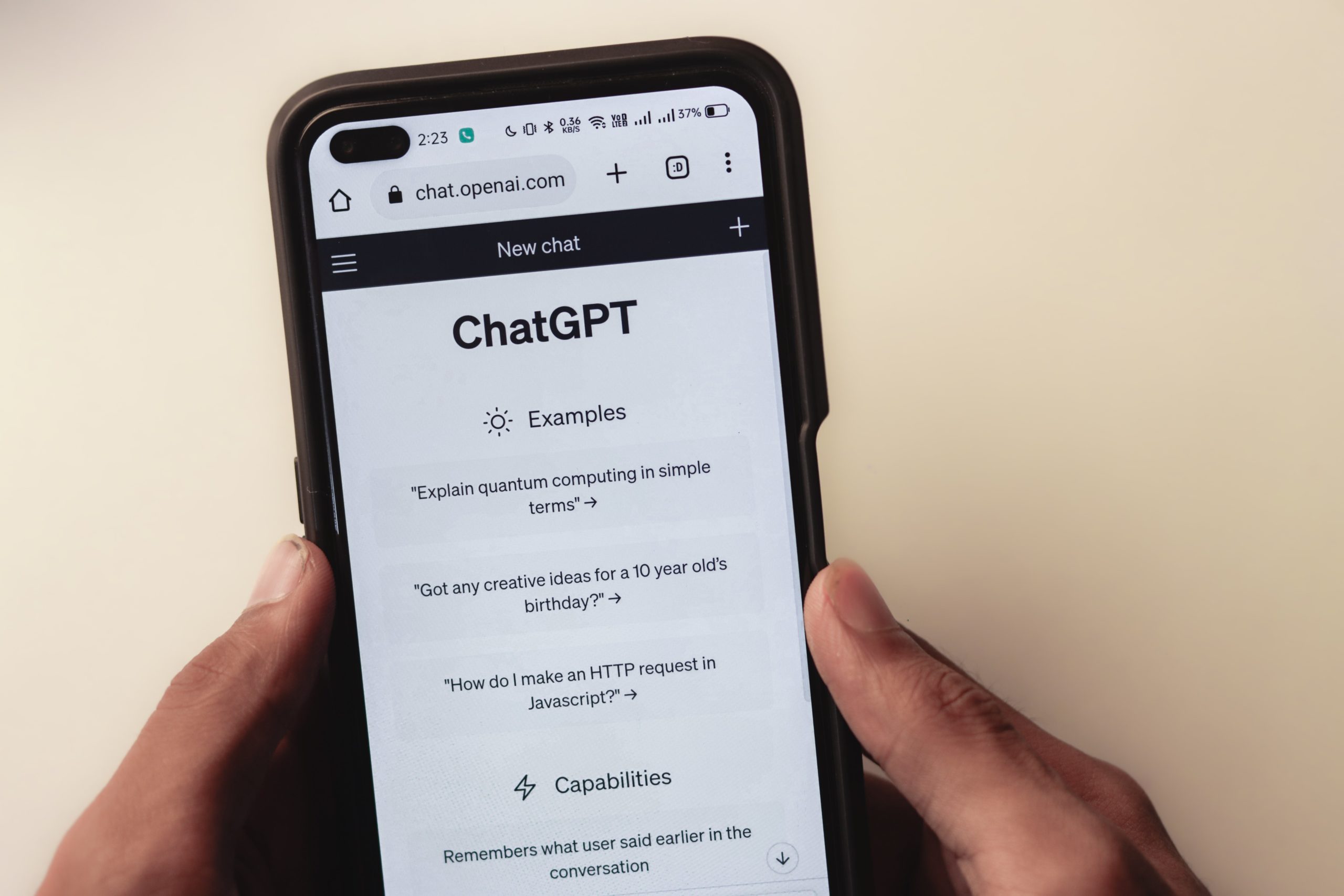

When using artificial intelligence (AI) chatbots like ChatGPT, many users already know the importance of not sharing sensitive and banking data. However, Stan Kaminsky, an expert at the security company Kaspersky, says it is also inadvisable to share even your name with artificial intelligence systems.


According to Kaminsky, personal information such as your address, name, and phone number should be treated as confidential, just like bank account data and other private information. The expert gives this advice because there is no guarantee that information entered into ChatGPT stays confidential.

The AI stores conversations with its users, and these can later be used to fix technical issues on the platform. Furthermore, chats can be reviewed by humans, who will then have access to any data contained in those conversations.

The information can be used in fraud

Stan Kaminsky's warning gained weight after the American website Ars Technica accused OpenAI of leaking users' private conversations. The company, however, stated that there had been no leak of information, but rather the theft of an account on the platform. Although many users believe that a name and phone number are harmless, this data can later be used to carry out different types of scams.

With a user's full name and phone number, a criminal can pose as a bank employee and request confidential information. Experts also warn users never to upload personal documents to chatbots, and this applies even to tools that process images.

Uploading such documents exposes the user's personal data and hands over material that criminals can later use to commit fraud. Finally, when a chatbot is asked to perform tasks involving personal information, the expert advice is to alter the data or replace the sensitive terms with asterisks.
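The asterisk advice above can be partly automated before a prompt is pasted into a chatbot. The following is a minimal sketch in Python, not a complete anonymizer: the `redact` function, the `mask` helper, and both regular expressions are hypothetical and written only for illustration. They will miss many real-world formats, so the result should still be checked by eye.

```python
import re
from typing import Iterable

# Hypothetical patterns for illustration only; real email and phone formats vary widely.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask(match: re.Match) -> str:
    """Replace every character of the matched text with an asterisk."""
    return "*" * len(match.group())


def redact(text: str, names: Iterable[str] = ()) -> str:
    """Mask emails, phone-like digit runs, and any explicitly listed names."""
    text = EMAIL_RE.sub(mask, text)
    text = PHONE_RE.sub(mask, text)
    for name in names:
        # Names cannot be detected reliably, so the caller lists them explicitly.
        text = re.sub(re.escape(name), mask, text, flags=re.IGNORECASE)
    return text


prompt = "Write a letter from Jane Doe, phone +1 555 123 4567, to my bank."
print(redact(prompt, names=["Jane Doe"]))
```

Names are passed in explicitly because a simple pattern cannot tell a personal name from any other capitalized word. Addresses and document numbers are not covered by this sketch and would need to be altered by hand.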
